Today, most enterprises use a security log analytics solution or a SIEM (Security Information and Event Management) system, but analytics are only as good as the data fed into them. If you’re missing data sources or failing to extract full value from the data you have, you won’t see the big picture.

This is an issue new customers commonly mention to NXLog. That’s why one of our key goals is to provide a solid data collection layer that ensures all relevant data is collected and properly fed into the SIEM.

It sounds simple, but modern data is quite complex.

Data complexity

For a start, data now comes in many formats. JSON is widely used for logging, but other formats such as XML and syslog are also very common. Log data can be structured or unstructured, and a variety of protocols are used to transmit it from sources to destinations.

To make matters more complex, the sheer volume of data is growing exponentially. The more data you have, the harder, and the more crucial, it becomes to extract meaningful insights. You’re likely familiar with the big data challenge: analyzing and storing massive amounts of data at scale. That challenge drives up operational costs, which must be monitored and managed daily.

Security teams need high-quality data, refined and enriched, to act effectively. Whether preventing security incidents or conducting forensic investigations, data analysis is crucial. If you manage to reduce data volume effectively, your analytics will become significantly faster.

The market is clearly shifting from log management to telemetry data pipeline management. Telemetry data broadens the scope beyond logs to also include metrics and traces generated by IT system endpoints, cloud applications, and other sources.

Historically, logs were handled by log management systems and metrics by monitoring systems.

Major SIEM, APM, and observability platforms, such as Microsoft Sentinel (formerly Azure Sentinel), Google Security Operations, Elastic, Dynatrace, and Datadog, initially focused on either security or operations. But as data and market needs have evolved, the two areas have been converging.

Telemetry pipelines and data management systems address this variety by collecting, processing, and routing data in a single solution. Vendors now offer unified products that serve multiple teams, from IT operations, DevOps, and DevSecOps to compliance officers, through one shared telemetry data pipeline.

Managing telemetry data is one thing; extracting actionable insights from it is another. At the end of the day, it’s all just data, but not all data is equally valuable. To troubleshoot outages or optimize performance, you need clean, relevant data forwarded to your observability solutions.

Telemetry pipeline architecture

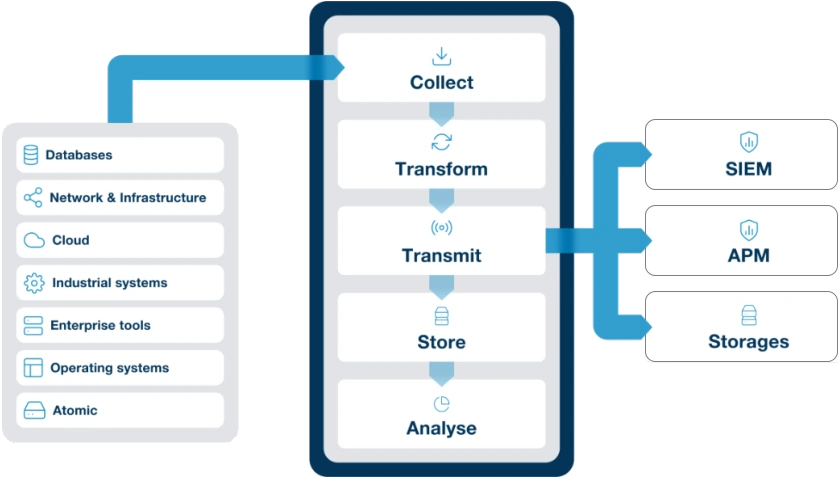

So, what does the architecture of telemetry pipelines look like? The core of a telemetry pipeline is a collection layer that gathers data from various sources.

Larger organizations commonly have heterogeneous data sources: different operating systems such as Linux, Windows, and macOS, and various applications running on-premises and in the cloud, as well as virtualization platforms, databases, and so on. Enterprises also require visibility into the infrastructure layer, including network devices and storage solutions.

Once data is picked up, it can be transformed, filtered, normalized and enriched. Then, data is routed to one or more destinations where it is stored and analyzed.
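The collect, transform, and route flow described above can be sketched in a few lines of Python. This is a hypothetical, minimal illustration, not how NXLog Agent is implemented: the field names, the alias table, the rule that drops debug and trace events, and the routing rule that sends authentication events to a SIEM are all assumptions chosen for the example.

```python
import json
from collections import defaultdict

Event = dict[str, object]

# Hypothetical alias table: different sources name the same field differently.
FIELD_ALIASES = {"host": "hostname", "level": "severity", "sev": "severity", "msg": "message"}
# Severities treated as noise and dropped before forwarding (an assumption).
DROPPED_SEVERITIES = {"debug", "trace"}


def parse(raw: str) -> Event:
    """Accept either a JSON object or whitespace-separated key=value pairs."""
    raw = raw.strip()
    if raw.startswith("{"):
        return json.loads(raw)
    return dict(token.split("=", 1) for token in raw.split() if "=" in token)


def normalize(event: Event) -> Event:
    """Rename fields to one common schema and lowercase the severity."""
    out = {FIELD_ALIASES.get(key, key): value for key, value in event.items()}
    out["severity"] = str(out.get("severity", "info")).lower()
    return out


def keep(event: Event) -> bool:
    """Filter: drop low-value events to reduce volume downstream."""
    return event["severity"] not in DROPPED_SEVERITIES


def enrich(event: Event, env_by_host: dict[str, str]) -> Event:
    """Enrich: attach context the source did not send (here, an environment tag)."""
    return {**event, "environment": env_by_host.get(str(event.get("hostname")), "unknown")}


def route(event: Event) -> str:
    """Route: authentication events to the SIEM, everything else to observability."""
    return "siem" if event.get("category") == "auth" else "observability"


def run_pipeline(lines: list[str], env_by_host: dict[str, str]) -> dict[str, list[Event]]:
    destinations: dict[str, list[Event]] = defaultdict(list)
    for line in lines:
        event = normalize(parse(line))
        if not keep(event):
            continue
        event = enrich(event, env_by_host)
        destinations[route(event)].append(event)
    return dict(destinations)


SAMPLE_LINES = [
    '{"host": "web-01", "level": "ERROR", "msg": "upstream timeout", "category": "app"}',
    "host=dc-01 sev=WARNING msg=login_failed category=auth",
    "host=web-01 sev=DEBUG msg=cache_hit category=app",
]
ENV_BY_HOST = {"web-01": "production", "dc-01": "corporate"}

if __name__ == "__main__":
    for destination, events in run_pipeline(SAMPLE_LINES, ENV_BY_HOST).items():
        print(destination, events)
```

Here the JSON error and the key=value authentication event end up in a common schema, the debug event is dropped before it costs anything downstream, and each surviving event goes to exactly one destination. A real pipeline could just as well fan an event out to several destinations.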

The data collection layer determines how much of the available data you can actually access. There are two options for it: installing an agent or going agentless.

Installing an agent gives more flexibility because the transformation, enrichment, and filtering stages can run in the collection layer itself, at the source. An agent can also collect metrics and traces, as well as other data that is highly relevant for security monitoring, such as data read from files and databases, file integrity monitoring, passive network monitoring, and open-port monitoring.

If you can’t install agents on your devices, the data can still be collected without them. Most devices and operating systems support this through a log forwarding mechanism such as syslog or Windows Event Forwarding, or expose their data through REST APIs.
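With agentless collection over syslog, the receiving end has to decode what devices send. As a small, hypothetical illustration (not how NXLog parses syslog), the sketch below decodes the PRI header that begins RFC 3164 and RFC 5424 syslog messages. This header is a number in angle brackets equal to facility * 8 + severity. The function name and return shape are assumptions made for the example.

```python
import re

# The PRI field is "<N>" at the very start of the message, with N in 0..191.
PRI_PATTERN = re.compile(r"<(\d{1,3})>")

# Severity names in numeric order 0..7, as listed in RFC 5424.
SEVERITIES = ["emergency", "alert", "critical", "error",
              "warning", "notice", "informational", "debug"]


def parse_pri(message: str) -> tuple[int, str, str]:
    """Return (facility, severity name, rest of message) for a syslog line."""
    match = PRI_PATTERN.match(message)
    if match is None:
        raise ValueError("message does not start with a syslog PRI field")
    pri = int(match.group(1))
    if pri > 191:  # facility is at most 23, so 23 * 8 + 7 = 191
        raise ValueError(f"PRI value out of range: {pri}")
    facility, severity = divmod(pri, 8)
    return facility, SEVERITIES[severity], message[match.end():]


if __name__ == "__main__":
    # Example message from RFC 3164: facility 4 (security/authorization), severity 2.
    print(parse_pri("<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8"))
```

Everything after the PRI (timestamp, hostname, message) still has to be parsed, and its layout differs between the two RFCs. This is one reason normalization is a separate pipeline stage.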

You can use agents, an agentless collection layer, or a hybrid of both.

A telemetry pipeline management solution also needs to store the data you collect and let you act on it. This adds real observability of your pipeline through search, reporting, dashboards, and alerting. Such systems are also intended to be vendor-agnostic, so that they integrate with multiple vendors’ SIEMs, observability tools, and other monitoring systems.

If you have a large number of endpoints in your organization, managing them becomes crucial. It’s important to have a centralized management console that allows you to oversee all your data sources and agents deployed on your endpoints. These agent management solutions must also provide health checks in addition to configuration management and deployment.
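A basic health check in an agent management console can compare each agent's last heartbeat and recent throughput against thresholds. The sketch below is hypothetical and is not NXLog Platform's logic. The `AgentStatus` fields, the five-minute staleness window, and the three status labels are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AgentStatus:
    # Hypothetical fields an agent might report with each heartbeat.
    agent_id: str
    last_heartbeat: datetime
    events_per_minute: float


def classify(agents: list[AgentStatus], now: datetime,
             stale_after: timedelta = timedelta(minutes=5)) -> dict[str, str]:
    """Label each agent as offline, silent (alive but sending nothing), or healthy."""
    report: dict[str, str] = {}
    for agent in agents:
        if now - agent.last_heartbeat > stale_after:
            report[agent.agent_id] = "offline"
        elif agent.events_per_minute == 0:
            report[agent.agent_id] = "silent"
        else:
            report[agent.agent_id] = "healthy"
    return report


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    fleet = [
        AgentStatus("web-01", now - timedelta(minutes=1), 120.0),
        AgentStatus("dc-01", now - timedelta(minutes=12), 40.0),
        AgentStatus("db-01", now - timedelta(seconds=30), 0.0),
    ]
    print(classify(fleet, now))
```

The "silent" case is the reason for checking throughput as well as liveness. An agent that still sends heartbeats but forwards no data can indicate a broken or misconfigured input, and a heartbeat check alone would not reveal that.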

Benefits of telemetry pipeline management systems

These solutions give you full visibility and situational awareness for operational efficiency, and they let you make data-driven decisions, for instance, predicting outages before they happen and preventing them. They also provide real-time monitoring of the data being ingested and processed.

Cost optimization is crucial in today’s economic environment. Customers are looking for vendor-neutral solutions that work with various SIEM and observability tools and that help reduce license costs. Because those tools are typically licensed by data volume, forwarding less data means paying less.

Cost optimization is not only about license savings, either. Large data volumes also put load on the network and on storage. It’s therefore important to store data efficiently in a compressed format (while keeping it accessible and searchable) and to transfer it efficiently over the network.
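To see why compressed storage matters, the sketch below compresses repetitive JSON log lines with Python's standard `gzip` module and then searches them by streaming decompression. It is a generic illustration, not NXLog Platform's storage format. The sample events are synthetic, and the actual ratio depends heavily on how repetitive real data is. This sketch scans every line; a production storage engine would typically add indexing so that queries do not need to.

```python
import gzip
import io
import json


def build_sample(n: int) -> bytes:
    """Generate n repetitive JSON log lines, similar to routine health-check traffic."""
    lines = [
        json.dumps({"hostname": f"web-{i % 4:02d}", "severity": "info",
                    "message": "GET /health 200", "seq": i})
        for i in range(n)
    ]
    return ("\n".join(lines) + "\n").encode("utf-8")


def search_compressed(blob: bytes, needle: str) -> list[str]:
    """Stream-decompress a gzip blob and return matching lines (a full scan, no index)."""
    with gzip.open(io.BytesIO(blob), "rt", encoding="utf-8") as stream:
        return [line.rstrip("\n") for line in stream if needle in line]


if __name__ == "__main__":
    raw = build_sample(10_000)
    compressed = gzip.compress(raw)
    print(f"{len(raw)} bytes -> {len(compressed)} bytes "
          f"(ratio {len(raw) / len(compressed):.1f}x)")
    print(len(search_compressed(compressed, '"hostname": "web-01"')), "matching lines")
```

Compressing before transfer reduces network load in the same way, because the same bytes are smaller on the wire.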

The final benefit is enhanced security and compliance coverage. For regular reports and audits, it is essential both to have all the data available and to be able to locate it easily when required. Many compliance standards mandate proper log collection and a solid security data management system.

Common use cases

What are the most common use cases where a well-managed telemetry pipeline excels when compared to traditional, separate operational and security analytics solutions?

One such scenario is operational logging and application performance management. As IT architectures grow more complex, running on-premises, in the cloud, and across distributed systems, tracing requests across these environments to diagnose issues becomes increasingly challenging. Here, a telemetry pipeline can help identify system failures and performance bottlenecks.

The second important scenario is security, the domain traditional SIEM solutions have covered to enable faster threat detection and incident response. Solutions such as UEBA (User and Entity Behavior Analytics) go further by adding fraud detection. They analyze user behavior to distinguish legitimate actions from intrusions using stolen credentials. Telemetry pipeline solutions can act as an effective pre-processor for SIEM solutions.

Compliance requirements place more and more controls on large and medium-sized enterprises, through standards and regulations such as ISO/IEC 27001, SOC 2, PCI DSS, NIS2, and others. Most of them explicitly require proper logging and management of log data. Many also require protection of personally identifiable information through anonymization, data masking, or encryption of user data. They also mandate a data retention policy for keeping important data over time, in some cases for several years.
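Masking and pseudonymization are often applied in the pipeline, before data reaches long-term storage. The sketch below is a minimal, hypothetical example. The `username` and `src_ip` field names, the hard-coded key, and the /24 and /48 prefix lengths are assumptions. Keyed hashing with HMAC-SHA256 replaces a username with a stable token, so events from the same user remain correlatable. This is pseudonymization rather than full anonymization, because anyone holding the key can re-identify known values by hashing candidate inputs. IP masking zeroes the host part of the address.

```python
import hashlib
import hmac
import ipaddress

# Hypothetical key for illustration only; a real deployment would load it from a secrets store.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Keyed hash: the same input always maps to the same token, without exposing the value."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "user-" + digest[:16]


def mask_ip(address: str) -> str:
    """Zero the host bits, keeping a /24 prefix for IPv4 and a /48 prefix for IPv6."""
    ip = ipaddress.ip_address(address)
    prefix = 24 if ip.version == 4 else 48
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.network_address)


def protect(event: dict) -> dict:
    """Return a copy of the event with the (hypothetical) PII fields masked."""
    out = dict(event)
    if "username" in out:
        out["username"] = pseudonymize(out["username"])
    if "src_ip" in out:
        out["src_ip"] = mask_ip(out["src_ip"])
    return out


if __name__ == "__main__":
    print(protect({"username": "alice", "src_ip": "203.0.113.57", "action": "login"}))
```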

How NXLog responds to the telemetry data challenges

Let’s take a closer look at how NXLog has been shifting from log management to telemetry data management, and what our technological approach is to help eliminate data chaos beyond security data.

NXLog Platform is a unified telemetry data collection, storage, and agent management solution. It can be used on its own or to complement your SIEM or your application performance monitoring (APM) and observability tools.

NXLog Platform’s agent management provides oversight of your telemetry data collection, processing, and forwarding. Our agent, NXLog Agent, is designed to efficiently and reliably process data from thousands of sources, with comprehensive operating system coverage. NXLog Agent’s versatility goes beyond collection: it provides a wide range of data transformation, processing, and forwarding capabilities, so it can handle the full scope of telemetry data.

NXLog Platform can efficiently handle a large number of agents, with auto-enrollment, configuration templates, and simplified management of large-scale deployments. NXLog Agent instances are easily configured in NXLog Platform with the intuitive configuration builder and an ever-growing suite of configuration templates. NXLog Platform also provides its own monitoring layer, giving a holistic health-check view of your agent fleet’s performance. With centralized configuration and monitoring in one tool, you can handle rapidly growing and changing agent fleets.

NXLog Platform is ready for data storage challenges. Its schemaless storage system provides flexibility, and its efficient, high-ratio compression optimizes storage with no impact on access performance. NXLog Platform’s telemetry data management controls data storage and provides search, dashboards, and analytics to help you gain insights from your data.

NXLog Platform gives you in-depth visualization of the health and performance of your agent fleet. You can query your telemetry data with its powerful, customizable SQL-based data visualization features. Customization includes shareable log searches and dashboards, so you can build a monitoring environment centrally and share it with your team. As with everything else in NXLog Platform, these analytical tools are built with scalability in mind to support large deployments.

Conclusion

The ever-increasing volume of telemetry and log data presents a challenge for all organizations, especially larger enterprises. Organizations need to deal with enormous amounts of data by reducing volume and improving quality, so that their analytics yield useful, actionable data points. This gets harder every day, but sooner or later the big data problem has to be solved.

We hope this has given you a brief overview of three things: why telemetry data pipeline management is, or will soon be, crucial for your organization; the key areas to pay attention to when building your own pipeline; and how NXLog is ready to help.