European electric power system operators supply around 2800 TWh of electricity per year and manage around 10 million kilometers of power lines, enough for more than ten round trips to the Moon. None of this would be possible without electric substations, an essential component of any power grid. Substation automation is increasingly digital, so it requires proper monitoring for both operational and security purposes. Let’s take a look at how a unified log collection pipeline fits into power automation systems and helps keep the lights on.

Going from analog to digital power automation

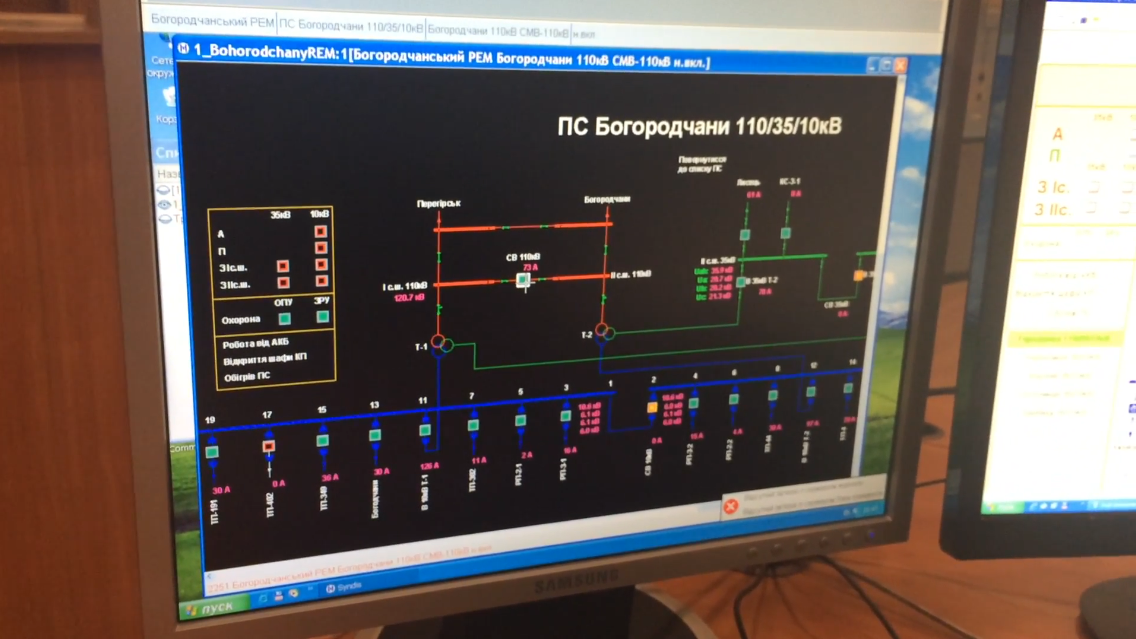

Electric substations transform voltage and control power transmission and distribution according to demand, which makes them the critical link between power production and power consumption, on both industrial and household scales. Legacy substations were completely analog and built literally on top of many kilometers of underground copper cabling.

This worked for decades, but it had many downsides. Copper is expensive, measurement capacity is limited because each control signal requires dedicated wiring, and aging equipment has few health-check or communication capabilities, so engineers must make scheduled on-site visits to collect the necessary information. Conventional substation design also posed a serious safety risk to engineering staff and equipment.

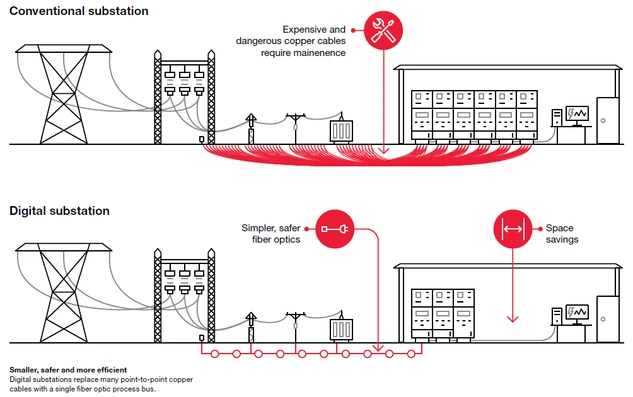

Digitalization reduced the hard-wiring of high-voltage and control equipment and let the grid run with greater reliability and lower safety risks. This was achieved by deploying smart digital components with Ethernet-based communication capabilities and by replacing point-to-point copper wiring with fiber-optic connectivity.

The savings are substantial. Belden estimated that, for a small to medium-sized substation, nearly 30 km (more than 18 miles) of copper cabling could be replaced by only 1.5 km (less than a mile) of optical fiber. Operational spending drops as well: new connectivity makes operational data available online, helps optimize utility performance in real time, and lets engineering teams work off-site, which reduces the number of maintenance visits and lowers safety risks.

The drawbacks of digitalization

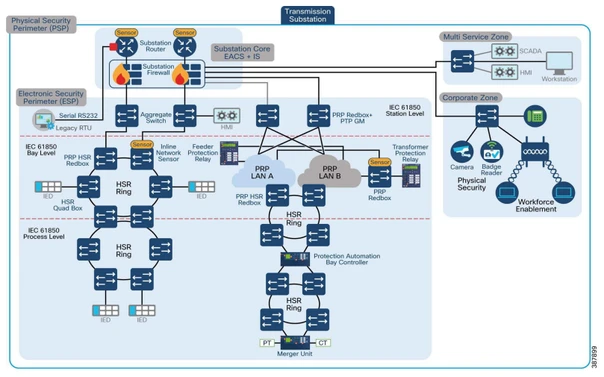

However, digitalization demands a complex IT infrastructure made up of many interconnected elements, such as network switches, routers, firewalls, workstations, servers, specialized remote terminal units (RTUs), intelligent electronic devices (IEDs), relays, terminals, hardware NTP units for time synchronization, and sophisticated SCADA software to operate the whole system.

Digital substation designs offer a high standard of control and robustness, with operational-level isolation, redundancy, and safety mechanisms. Because a digital substation is now a full-fledged IT system, it is no surprise that it also needs new digital monitoring processes to manage failures and recover in time. As part of a comprehensive digital power grid, substations have to be monitored centrally to achieve the desired level of efficiency and reliability. Still, most architectures from automation vendors leave gaps in monitoring, which increases recovery time because engineers have to troubleshoot manually across dozens of components. The problem gets even worse during a critical incident.

Let’s have a look at how centralized log collection helps both operations and security teams.

Unified log collection for operational technology (OT)

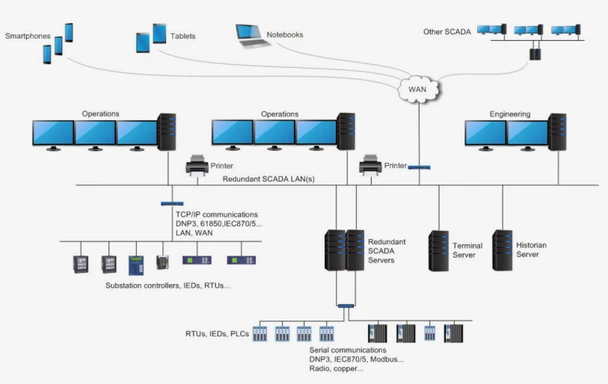

Operations teams generally have redundant access to all OT-related data, such as measurements, metrics, and sampled values, which is collected, processed, and stored on Historian servers for analysis by SCADA- or MES-level applications.

The data typically missed during continuous operation is IT operations data: Windows event logs, SCADA software logs, audit logs from network equipment and PLCs, and other typical IT data. Although OT engineers use this data extensively during on-site system configuration and construction work, it is underutilized and left unmanaged during normal operation.

So, when a failure occurs, an engineer does have a full trace of OT data collected and available via the SCADA software. However, access to system logs and SCADA software logs remains very limited, even though some of these logs may be the only source of information about a problem affecting the whole OT process. That inconvenience may be a reasonable trade-off for a single small utility, but for a power grid with thousands of utilities operating in a highly coordinated fashion, it becomes a serious security and operational risk.

Collecting these logs is a challenge in itself. Substations are built on unique, complex, and heterogeneous infrastructure assembled from many hardware and software components, each with its own approach to logging. For instance, useful SCADA software logs may be stored in plain-text files with a custom format, in database tables, or in operating system facilities such as Windows event logs. PLCs and RTUs may also provide audit events via syslog or an FTP-like interface. To better understand your options when building a syslog-based pipeline on Unix and Linux, see our comparison of rsyslog vs syslog-ng.
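To illustrate what these different approaches to logging mean in practice, here is a minimal Python sketch that normalizes two source types into one common record: an RFC 3164-style syslog line, as a PLC or RTU might send, and a line from a plain-text SCADA log. The pipe-delimited SCADA format, its level names, and the record fields are hypothetical; real products each use their own layouts.

```python
import re

# RFC 3164-style syslog line: "<PRI>Mmm dd hh:mm:ss HOST MSG"
SYSLOG_RE = re.compile(
    r"^<(?P<pri>\d{1,3})>"
    r"(?P<time>[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) (?P<message>.*)$"
)

# Hypothetical custom SCADA text format: "YYYY-MM-DD hh:mm:ss|LEVEL|message"
SCADA_RE = re.compile(
    r"^(?P<time>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\|(?P<level>[A-Z]+)\|(?P<message>.*)$"
)

# Syslog severity names, indexed by the numeric severity (PRI mod 8)
SYSLOG_SEVERITIES = ["EMERG", "ALERT", "CRIT", "ERR", "WARNING", "NOTICE", "INFO", "DEBUG"]

# Hypothetical mapping from the SCADA format's levels to syslog severity names
SCADA_LEVELS = {"ERROR": "ERR", "WARN": "WARNING", "INFO": "INFO", "DEBUG": "DEBUG"}


def normalize(line, source):
    """Turn one raw log line into a common record, or return None if no format matches."""
    m = SYSLOG_RE.match(line)
    if m:
        pri = int(m["pri"])  # PRI = facility * 8 + severity
        return {
            "source": source,
            "host": m["host"],
            "facility": pri // 8,
            "severity": SYSLOG_SEVERITIES[pri % 8],
            "time": m["time"],  # RFC 3164 timestamps carry no year or time zone
            "message": m["message"],
        }
    m = SCADA_RE.match(line)
    if m:
        return {
            "source": source,
            "host": None,  # this text format does not record a host name
            "facility": None,
            "severity": SCADA_LEVELS.get(m["level"], "NOTICE"),
            "time": m["time"],
            "message": m["message"],
        }
    return None
```

For example, `normalize("<34>Oct 11 22:14:15 rtu01 audit: login failed for admin", "rtu01-syslog")` yields facility 4 and severity `CRIT`, because 34 = 4 × 8 + 2. Once every source produces the same record shape, filtering, routing, and correlation can be written once instead of per device.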

What is needed is a unified log collection solution that automates collection, captures all required data from disparate sources, and centralizes it, so that operations and security teams can quickly perform root-cause analysis during troubleshooting, recover faster, and predict failures ahead of time.

The key requirements for a unified log collection pipeline in industrial control and critical infrastructure environments are demanding:

- Support for new and legacy operating systems, including Windows and Linux
- High reliability and a low footprint on system resources
- Capabilities to integrate with specialized hardware like PLCs and RTUs, and with SCADA software
- Support for many source types (text files, databases, network endpoints like syslog, etc.)
- Support for many destination types (text files, databases, network endpoints like syslog, etc.)
- Support for many data formats (such as CSV, XML, and JSON), including custom formats
- Ability to apply extensive filtering before sending data to minimize network footprint
- Support for different log collection architectures, including both agent-based and agentless
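The filtering requirement is easiest to see with an example. The Python sketch below keeps only records at or above a chosen syslog severity and serializes the survivors as compact newline-delimited JSON before they leave the site. The record layout and the default `WARNING` threshold are assumptions for illustration; a real pipeline would typically also filter on event IDs, sources, or message content.

```python
import json

# Syslog severities from most to least severe; a lower rank is more severe.
SEVERITY_RANK = {
    name: rank
    for rank, name in enumerate(
        ["EMERG", "ALERT", "CRIT", "ERR", "WARNING", "NOTICE", "INFO", "DEBUG"]
    )
}


def filter_for_forwarding(records, min_severity="WARNING"):
    """Return the records whose severity is at least as severe as `min_severity`.

    Records with a missing or unknown severity are treated as DEBUG,
    so they are dropped unless the threshold is DEBUG.
    """
    max_rank = SEVERITY_RANK[min_severity]
    return [
        r for r in records
        if SEVERITY_RANK.get(r.get("severity"), SEVERITY_RANK["DEBUG"]) <= max_rank
    ]


def to_ndjson(records):
    """Serialize records as compact newline-delimited JSON bytes."""
    return b"".join(
        (json.dumps(r, separators=(",", ":"), sort_keys=True) + "\n").encode("utf-8")
        for r in records
    )


if __name__ == "__main__":
    batch = [
        {"host": "rtu01", "severity": "INFO", "message": "poll ok"},
        {"host": "rtu01", "severity": "ERR", "message": "communication link down"},
        {"host": "hmi01", "severity": "DEBUG", "message": "screen redraw"},
        {"host": "fw01", "severity": "WARNING", "message": "packet dropped"},
    ]
    kept = filter_for_forwarding(batch)
    print(len(kept), "of", len(batch), "records forwarded")
    print(to_ndjson(kept).decode("utf-8"), end="")
```

Filtering at the source rather than at the central server is what saves bandwidth on constrained substation links; the trade-off is that anything dropped locally is gone, so thresholds should be chosen conservatively.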

Whether an organization relies on its OT vendors' native log collection capabilities (which are typically very limited), implements a full-scale log management product, or designs an in-house solution based on open-source components, the effort pays off. Once data from SCADA software logs, PLCs, infrastructure services such as Active Directory, DHCP, and DNS, and operating system logs is centralized, OT and IT engineers can quickly access events and recover from technological process failures.

Log collection and OT security

Another side of digitalization is cybersecurity. In early 2014, an analysis by the U.S. Federal Energy Regulatory Commission (FERC) found that the U.S. could suffer a nationwide blackout lasting weeks or months if saboteurs simultaneously targeted just nine of its 55,000 power substations, threatening the collapse of the entire national grid.

Such an attack has already happened in real life. In 2015, during a major APT-style cyberattack on the Ukrainian power grid, hackers penetrated electrical utilities and caused power outages for roughly 230,000 households.

Subsequent investigations found that the attackers had maintained persistence in the systems, unnoticed, for more than half a year before launching the actual attack. The investigation itself took months to reveal the details because the logs had to be gathered manually from the systems that survived the attack and analyzed after the fact.

Videos and other material published afterward show that, during the incident, all OT controls on the compromised systems worked properly, yet engineers could not understand what was going on or plan their actions accordingly. A lack of proper training played a role, but no OT/SCADA system is built to provide enough security information; that is simply not its objective. To achieve an appropriate level of situational awareness, ongoing log collection and analysis must be in place.

Both SCADA software and smart devices (such as PLCs and RTUs) typically log operation and audit data in some way. These logs may be incomplete, weakly implemented, or poorly documented, but they exist and can support ongoing security monitoring, especially when correlated with events from other IT infrastructure services, such as Active Directory or regular Windows event logs.
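As a minimal sketch of such correlation, the Python function below flags PLC or SCADA change events that have no successful Active Directory logon by the same user in the preceding time window. It assumes, hypothetically, that both sources are already normalized into records with `user` and `time` fields and that device audit user names match AD account names, which is often not the case when devices use shared local accounts.

```python
from datetime import datetime, timedelta


def unexplained_changes(change_events, ad_logons, window=timedelta(minutes=30)):
    """Return change events with no successful AD logon by the same user
    within `window` before the change.

    A nested loop keeps the sketch readable; large volumes would call for
    indexing logons by user first.
    """
    flagged = []
    for change in change_events:
        user = change["user"].lower()  # compare account names case-insensitively
        explained = any(
            logon["user"].lower() == user
            and change["time"] - window <= logon["time"] <= change["time"]
            for logon in ad_logons
        )
        if not explained:
            flagged.append(change)
    return flagged


if __name__ == "__main__":
    t = datetime(2024, 1, 15, 9, 0)
    logons = [{"user": "jdoe", "time": t}]
    changes = [
        {"user": "JDoe", "time": t + timedelta(minutes=10), "action": "download logic to PLC"},
        {"user": "svc_eng", "time": t + timedelta(minutes=12), "action": "change setpoint"},
    ]
    for c in unexplained_changes(changes, logons):
        print("review:", c["user"], c["action"])
```

Neither source on its own looks suspicious here; only the combination reveals a configuration change made by an account that never logged on through the domain.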

Security monitoring, which is based mainly on log analysis, is part of virtually every cybersecurity framework you pick: NIST CSF, ISO/IEC 27001, NERC CIP, IEC 62443 (ISA99), IEC 62351, and so on. Leading industrial automation vendors have also incorporated security monitoring practices into their security guides.

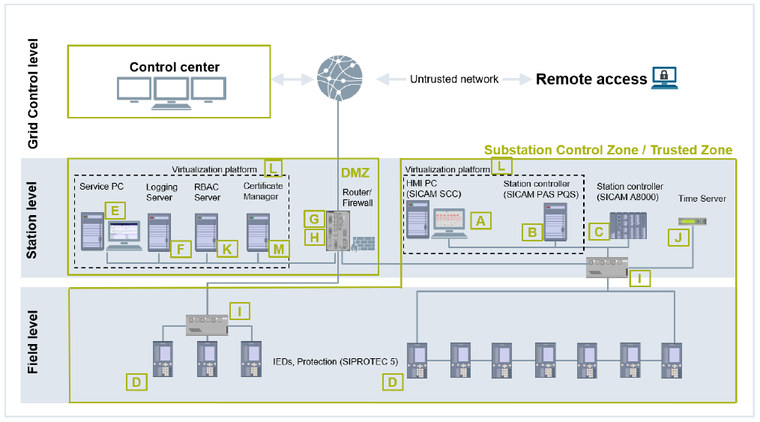

For instance, Siemens' System Hardening for Substation Automation and Protection guide highlights four core security principles for a digital substation site:

- Network Segmentation
- Secure Configuration
- Least Privilege
- Continuous Monitoring

Regarding the fourth principle, continuous monitoring, Siemens engineers note:

“One more important principle is the monitoring of your network. To identify attacks or suspicious irregularities, it is important to have a central logging server collecting critical information from all involved components of the secure substation like dropped packets of a firewall or error entries in a Windows event log system.”

As part of the substation architecture, Siemens suggests implementing a central logging server “for an easy overview and a fast response” and tracking valuable events from both the field and station levels of the site, including:

- Failed/successful login
- Logout
- Changes in user/password management (user added, deleted, modified, etc.)
- Software/firmware updates

The guide also notes that establishing central log collection across a diverse set of network devices, software, and Microsoft products (such as Windows) is not an easy task, and it recommends professional log collection tools, such as NXLog Enterprise Edition, to accomplish it.
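On Windows hosts, the login, logout, and user-management events from the Siemens list correspond to well-known Security log event IDs. The Python sketch below uses that mapping to pick the relevant events out of a stream. The dictionary field names (`Channel`, `EventID`) are assumptions about how events were parsed upstream. Software and firmware updates are left out because they are usually recorded by vendor tools or in other logs rather than the Security log, and account-management events for domain accounts are logged on domain controllers rather than on the individual workstation.

```python
# Windows Security log event IDs for the Siemens event categories.
SECURITY_EVENT_CATEGORIES = {
    4624: "login_success",    # An account was successfully logged on
    4625: "login_failure",    # An account failed to log on
    4634: "logout",           # An account was logged off
    4647: "logout",           # User initiated logoff
    4720: "user_management",  # A user account was created
    4722: "user_management",  # A user account was enabled
    4723: "user_management",  # An attempt was made to change an account's password
    4724: "user_management",  # An attempt was made to reset an account's password
    4725: "user_management",  # A user account was disabled
    4726: "user_management",  # A user account was deleted
    4738: "user_management",  # A user account was changed
}


def select_security_events(events):
    """Return (category, event) pairs for Security log events worth forwarding.

    Events from other channels, or with IDs not in the mapping, are skipped.
    """
    selected = []
    for event in events:
        if event.get("Channel") != "Security":
            continue
        category = SECURITY_EVENT_CATEGORIES.get(event.get("EventID"))
        if category is not None:
            selected.append((category, event))
    return selected
```

Selecting by event ID keeps the forwarded volume small: a busy Security log also contains high-volume events, such as process creation (4688), that are only worth sending when a detection actually needs them.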

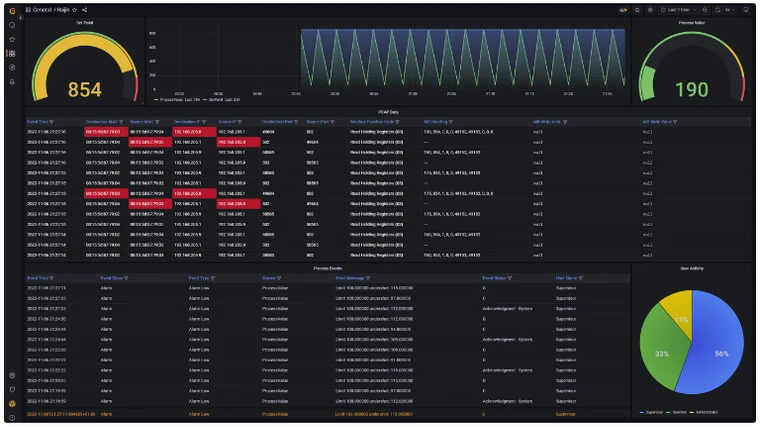

A central logging server can be equipped with its own log analysis mechanisms, which lets the operations team use the data, make decisions, and act autonomously during a security incident. Alternatively, the data can be sent to a security system such as a SIEM or SOAR platform for in-depth analysis. That enables sophisticated threat hunting but requires a dedicated team of security experts. Both scenarios should be considered and implemented as complementary countermeasures.
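As an example of the first scenario, a central logging server can run simple detection rules itself. The Python sketch below raises an alert when five failed logins for the same host and user fall within five minutes, using a sliding window per key. Both thresholds and the record fields (`host`, `user`, `time`) are hypothetical and would need tuning for a real site.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def detect_bruteforce(failed_logins, threshold=5, window=timedelta(minutes=5)):
    """Return (host, user, time) alerts when `threshold` failed logins for the
    same host and user occur within `window`."""
    recent = defaultdict(deque)  # (host, user) -> timestamps inside the window
    alerts = []
    for event in sorted(failed_logins, key=lambda e: e["time"]):
        key = (event["host"], event["user"])
        times = recent[key]
        times.append(event["time"])
        # Drop timestamps that have slid out of the window
        while event["time"] - times[0] > window:
            times.popleft()
        if len(times) >= threshold:
            alerts.append((event["host"], event["user"], event["time"]))
            times.clear()  # one alert per burst
    return alerts


if __name__ == "__main__":
    start = datetime(2024, 1, 15, 2, 0)
    burst = [{"host": "hmi01", "user": "operator", "time": start + timedelta(seconds=30 * i)}
             for i in range(5)]
    slow = [{"host": "eng01", "user": "admin", "time": start + timedelta(minutes=10 * i)}
            for i in range(5)]
    for host, user, when in detect_bruteforce(burst + slow):
        print(f"ALERT {when:%H:%M:%S} {user}@{host}: repeated failed logins")
```

Sorting first keeps the rule correct when events from different collectors arrive out of order, and clearing the window after an alert avoids flooding operators with duplicate alerts for a single burst.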

How can NXLog help?

NXLog Enterprise Edition is a professional solution that allows you to build a unified log collection pipeline by centralizing audit and operation events across your whole infrastructure, including IT and OT components.

- A lightweight log collection pipeline, proven in OT networks built on technologies from Siemens, Schneider Electric, and other vendors.
- Event processing that filters and routes security events from both the system level (such as Windows, Linux, AIX, and legacy systems) and the SCADA application level (such as Siemens WinCC, Siemens STEP 7, Siemens SICAM, and others).
- Support for both agent-based and agentless collection, which is critical in heterogeneous environments like OT.
- Log forwarding to all major SIEM and APM systems, such as Splunk, IBM QRadar, Google Chronicle, Microsoft Sentinel, Micro Focus ArcSight, Datadog, Graylog, and others.
- General-purpose network monitoring capabilities that give the security team additional insight without leaving the SIEM dashboard.

Looking for a versatile log collection solution for your OT environment? Try NXLog Enterprise Edition for free today or get in touch to learn more.