Logs are a record of the internal workings of a system. Nowadays, organizations can have hundreds and, more regularly, thousands of managed computers, servers, mobile devices, and applications; even refrigerators are generating logs in this Internet of Things era. The result is the production of terabytes of log data—event logs, network flow logs, and application logs, to name a few—that must be carefully sorted, analyzed, and stored.

Without a log management tool, you would need to manually search through many directories of log files on each system to access and extract meaning from these millions of event logs. Historically, writing a script to automate log collection on a set schedule was the norm, but this approach does not scale across modern systems and environments. Even syslog, a UNIX facility that can copy logs to a central server, is operating system dependent and not easily configured.

This is where log aggregation comes in.

What is log aggregation?

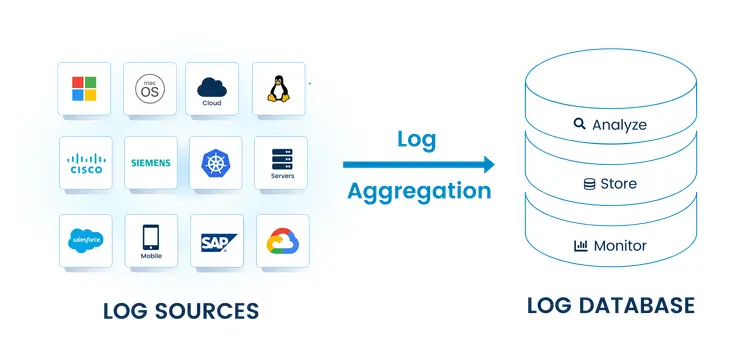

Log aggregation consolidates logs from different sources into a central location. When performed correctly, logs from all devices are merged into one or more streams of data, depending on how they will be analyzed. Log aggregation turns confusing or unintelligible logs into meaningful log data which can be more effectively synthesized and understood by your organization. Centralized logging simplifies the log management process. It also allows you to harness real-time log data for faster event response time and a deeper understanding of your organization’s digital environment.

The various stages of log aggregation

- Collection

Determining which devices and types of logs to collect, and the mode of collection, is the first step. For workstations and servers, you normally need to install a logging agent. Windows Event Forwarding (WEF) can be used for very basic log aggregation on Windows without the need to install an agent, but it has some drawbacks and, like syslog, it is operating system dependent.

- Parsing

Beginning with data ingestion, the log is collected in its base form. Most modern logs already contain structured or semi-structured data. However, even with structured data, some fields may contain embedded fields of information that can be parsed into new fields, making the event records even more valuable later on during the analytical stage. For unstructured or semi-structured data that is known to contain such fields, it is important to parse the fields sooner rather than later, ideally on the same host where the logs are generated. A workstation will need only negligible resources to parse its own data in real time, while an intermediate or centralized log collection server might become overloaded if given the task of processing the logs from hundreds or thousands of hosts it is receiving concurrently.
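As a minimal sketch of parsing embedded fields at the source, the following Python snippet promotes `key=value` pairs found inside a free-text field to separate fields. The event layout, the `Message` field name, and the `parse_embedded_fields` helper are assumptions made for illustration and are not taken from any particular product.

```python
import re

# Matches key=value pairs where the value is either double-quoted or a bare token.
KV_PATTERN = re.compile(r'(\w+)=("([^"]*)"|\S+)')


def parse_embedded_fields(event: dict, source_field: str = "Message") -> dict:
    """Return a copy of the event with key=value pairs from source_field added as fields."""
    parsed = dict(event)
    message = event.get(source_field, "")
    for key, raw, quoted in KV_PATTERN.findall(message):
        # Use the inner capture when the value was wrapped in quotes.
        value = quoted if raw.startswith('"') else raw
        # Never overwrite a field that already exists on the event.
        parsed.setdefault(key, value)
    return parsed


# Hypothetical event as it might look right after collection.
event = {
    "Hostname": "ws-042",
    "Message": 'action=login user="alice smith" src=10.0.0.7 result=failure',
}
print(parse_embedded_fields(event))
```

Running a step like this on the host that generates the event keeps the per-event cost small and spares the central collector from parsing every message it receives.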

- Standardizing

With the many different forms that a log can take, a standardization process is necessary. This can include data normalization. Normalizing data makes it possible to merge specific types of logs, such as DNS lookups from macOS and Windows hosts, into a common schema. Once a SIEM has ingested these DNS client events, queries against them become far less complex. You may also need to standardize logs to a data format like JSON to make them digestible by your SIEM.
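To illustrate normalization, here is a small Python sketch that maps DNS client events from two platforms onto one schema and emits JSON. The native field names (`QueryName`, `Computer`, `query`, `host`, and so on) and the common schema are hypothetical; real event sources will use their own names.

```python
import json

# Hypothetical per-platform field names mapped to one common schema.
FIELD_MAPS = {
    "windows": {"QueryName": "dns_query", "Computer": "hostname", "TimeCreated": "timestamp"},
    "macos": {"query": "dns_query", "host": "hostname", "time": "timestamp"},
}


def normalize_dns_event(event: dict, platform: str) -> dict:
    """Rename platform-specific fields to the common schema and tag the platform."""
    mapping = FIELD_MAPS[platform]
    normalized = {
        common: event[native] for native, common in mapping.items() if native in event
    }
    normalized["platform"] = platform
    return normalized


windows_event = {"QueryName": "example.com", "Computer": "WIN-01", "TimeCreated": "2024-01-01T00:00:00Z"}
macos_event = {"query": "example.com", "host": "mac-01", "time": "2024-01-01T00:00:05Z"}

for raw, platform in ((windows_event, "windows"), (macos_event, "macos")):
    # One JSON object per line is a format many log pipelines accept.
    print(json.dumps(normalize_dns_event(raw, platform), sort_keys=True))
```

With both sources sharing the `dns_query` and `hostname` fields, a single query can cover DNS lookups from every platform.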

- Modifying

Logs can be modified during the aggregation process. In some circumstances, you may want to redact or remove certain key-value fields before further processing the log data, for example, censoring sensitive user information like passwords or encryption keys. Similarly, specific fields may contain extraneous information that can be truncated, and some log sources that are valuable to your organization may still carry unimportant fields. Truncating or dropping entire fields conserves network bandwidth and disk storage. Fields can also be added to enhance the data quality of your logs, like timestamps and hostnames. This is especially important for log sources from macOS workstations, since they rarely contain a hostname field or any other field that can be used to identify the host that generated the logs. This essential information is required for performing aggregation queries that provide you with metrics like how many (or what percentage) of hosts have experienced any given security event within a specific time frame. Using a standard query with the hostname information would enable you to identify which workstations were affected by the event.
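The modifications described above can be sketched in a few lines of Python. The field names in `SENSITIVE_FIELDS` and `DROPPED_FIELDS`, the `[REDACTED]` marker, and the `modify_event` helper are illustrative assumptions, not a prescribed configuration.

```python
import socket
from datetime import datetime, timezone
from typing import Optional

# Hypothetical field names; adjust to the log sources in use.
SENSITIVE_FIELDS = {"password", "api_key"}
DROPPED_FIELDS = {"debug_context"}


def modify_event(event: dict, hostname: Optional[str] = None) -> dict:
    """Redact sensitive fields, drop unimportant ones, and add identifying fields."""
    modified = {}
    for key, value in event.items():
        if key in DROPPED_FIELDS:
            continue  # dropping the field saves bandwidth and storage
        modified[key] = "[REDACTED]" if key in SENSITIVE_FIELDS else value
    # Add a hostname and timestamp only when the source did not provide them.
    modified.setdefault("hostname", hostname or socket.gethostname())
    modified.setdefault("timestamp", datetime.now(timezone.utc).isoformat())
    return modified


event = {"user": "alice", "password": "hunter2", "debug_context": "trace data"}
print(modify_event(event, hostname="mac-17"))
```

Because every event leaves the host with a `hostname` field, a later query can count or list the hosts affected by a given event.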

- Filtering

You probably will not need to collect duplicated logs showing the same information hundreds of times, informational logs of a benign nature, or debug messages that are of interest only to the developers who maintain the software generating the events. These can all be dropped at the source, thereby decreasing the network throughput of unnecessary logs and, by extension, increasing the throughput of relevant logs. This means more efficient data collection and a huge boost to the quality of logs you are sending to your SIEM.
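A minimal Python sketch of filtering at the source might look like the following. The `severity`, `hostname`, and `message` field names and the set of dropped severities are assumptions; which events count as noise depends on your environment.

```python
from typing import Iterable, Iterator

# Hypothetical policy: debug and informational events are not forwarded.
DROPPED_SEVERITIES = {"DEBUG", "INFO"}


def filter_events(events: Iterable[dict]) -> Iterator[dict]:
    """Yield only events that are neither low-severity noise nor exact repeats."""
    seen = set()
    for event in events:
        if str(event.get("severity", "")).upper() in DROPPED_SEVERITIES:
            continue
        # Treat events with the same host, severity, and message as duplicates.
        fingerprint = (event.get("hostname"), event.get("severity"), event.get("message"))
        if fingerprint in seen:
            continue
        # Note: this set grows without bound; a long-running agent would
        # typically limit deduplication to a time window.
        seen.add(fingerprint)
        yield event


events = [
    {"hostname": "ws-1", "severity": "DEBUG", "message": "cache warmed"},
    {"hostname": "ws-1", "severity": "ERROR", "message": "disk failure"},
    {"hostname": "ws-1", "severity": "ERROR", "message": "disk failure"},
    {"hostname": "ws-2", "severity": "WARNING", "message": "login failed"},
]
for kept in filter_events(events):
    print(kept)
```

In this sample, the debug message and the repeated error are dropped, so only two of the four events would be sent on.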

- Shipping

This is the final stage of the log aggregation process. Once the logs have been processed, they are ready for forwarding over the network, known as “shipping”, to their final destination. The confidentiality of logs in transit is addressed with secure encryption protocols like TLS. Typically, they are shipped to a SIEM that will ingest them. Once they are in the SIEM, you can run correlations on them, generate metrics, and visualize events. SIEMs are not always the final resting place of aggregated logs. They can also be shipped to a database like Raijin, which specializes in log data and provides a foundation for developing real-time data analytics.
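The sketch below shows one way to ship processed events over TLS using only the Python standard library. The newline-delimited JSON framing, the default port, and the `logs.example.com` host in the usage comment are assumptions for illustration; a real destination defines its own protocol and endpoint.

```python
import json
import socket
import ssl
from typing import Iterable


def encode_events(events: Iterable[dict]) -> bytes:
    """Serialize events as newline-delimited JSON, one event per line."""
    return b"".join(
        json.dumps(event, sort_keys=True).encode("utf-8") + b"\n" for event in events
    )


def ship_events(events: Iterable[dict], host: str, port: int = 6514, timeout: float = 10.0) -> None:
    """Send events to a TLS endpoint, verifying the server certificate and hostname."""
    context = ssl.create_default_context()  # certificate and hostname checks are on by default
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            tls_sock.sendall(encode_events(events))


# Usage (requires a reachable TLS listener; the hostname is a placeholder):
# ship_events([{"hostname": "ws-1", "message": "disk failure"}], "logs.example.com")
```

Keeping the serialization in `encode_events` separate from the network code makes the framing easy to check without a live connection.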

Note: Unlike most logging agents, NXLog can parse, standardize, modify, and filter during the collection stage, on the fly, while it is shipping the processed log data to the centralized log destination, on any modern operating system, to almost any SIEM. This is just one of the ways NXLog is able to achieve such high performance.

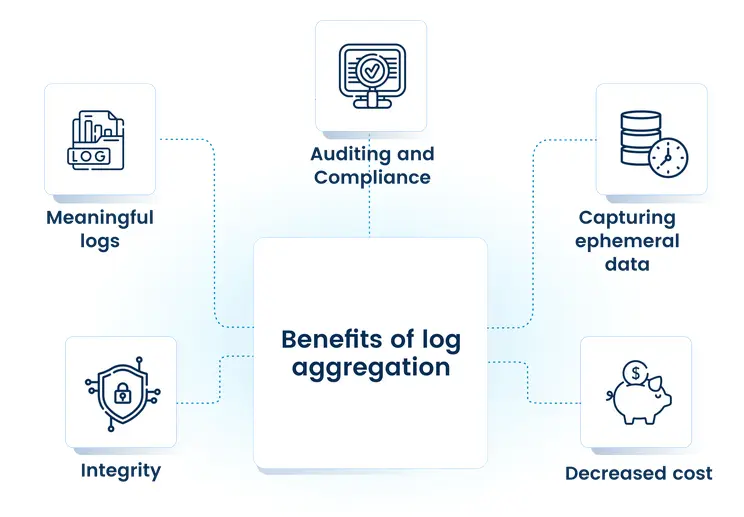

The benefits

A solution that implements all stages of log aggregation can be a highly effective tool for uncovering the real value hidden in your organization’s log data. There are many benefits to incorporating log aggregation software into your log management strategy.

- Decreased throughput and storage requirements, decreased costs

Many SIEM platforms are priced based on throughput, whether EPS (Events Per Second) or GB/day (Gigabytes per day). Additionally, archival space is priced per GB of disk space used. By filtering out the noise, you can significantly decrease the number of unnecessary logs sent to your analytics and storage platforms, thereby lowering the running costs of these systems.
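As a rough worked example of how filtering affects volume-based pricing, the following Python sketch converts an event rate into GB/day. All input figures (5,000 EPS, 500-byte events, 40% of events dropped) are hypothetical and chosen only to show the arithmetic.

```python
SECONDS_PER_DAY = 86_400


def daily_volume_gb(events_per_second: float, avg_event_bytes: float) -> float:
    """Estimate daily log volume in gigabytes (1 GB = 10**9 bytes)."""
    return events_per_second * avg_event_bytes * SECONDS_PER_DAY / 1e9


def filtered_volume_gb(events_per_second: float, avg_event_bytes: float, drop_fraction: float) -> float:
    """Estimate daily volume after dropping a fraction of events at the source."""
    return daily_volume_gb(events_per_second * (1.0 - drop_fraction), avg_event_bytes)


# Hypothetical inputs for illustration only.
before = daily_volume_gb(5_000, 500)           # about 216 GB/day
after = filtered_volume_gb(5_000, 500, 0.40)   # about 129.6 GB/day
print(f"before: {before:.1f} GB/day, after: {after:.1f} GB/day")
```

Under these assumed numbers, dropping 40% of events at the source removes roughly 86 GB from each day's billable volume.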

- Meaningful logs

Logs are only useful if meaning can be extracted from them. Log aggregation platforms can parse free-text event messages into meaningful, structured data that your analytics platform can more efficiently use. This results in simpler queries that run faster, which in turn means faster notifications and reduced response times for your security operations to investigate an event.

- Capturing ephemeral data

Increasingly, logs are ephemeral, especially in distributed, cloud-based environments that use systems like Docker and Kubernetes. These logs must be captured within a certain period or the information will be lost. A log aggregation solution can gather these logs at creation time and ship them to a permanent store.

- Integrity

Aggregating logs in a central location increases the integrity of your logs. In the event of a breach, log messages can show the steps that an attacker took. Attackers often attempt to cover their tracks by deleting or manipulating logs on the compromised host. Because log aggregation tools ship a copy of each event to a central location, tampering with the local logs does not erase the record.

- Auditing and compliance

Many regulations, including PCI-DSS and HIPAA, require logs to be aggregated and stored for a set retention period. Your auditors may also require this of you. Log aggregation allows your organization to conform to these requirements. The Raijin database is optimized for log data, integrates seamlessly with NXLog, and supports expression-based partitioning that can automate this task for you.

Technical requirements

To create these tangible benefits for an organization’s log management strategy, a log aggregation solution should fulfill a set of essential requirements.

- Scalability

With the potential for thousands of devices across an organization’s infrastructure, a system for aggregating logs should be easy to deploy at enterprise scale.

- Multi-platform support

Most organizations' IT infrastructure employs multiple operating systems, containerized applications, embedded systems, a wide range of third-party solutions, and more. A log aggregation solution should be modular and work equally well on each system, with the same degree of autonomy and accuracy.

- High throughput

Millions of events can be generated in short spaces of time. Any log management platform not capable of sustaining an ingestion rate that meets or exceeds the average rate of logs generated is not a viable solution. This is an important requirement to consider if you want to prevent loss of valuable data.

- Integration

Integrating log aggregation with third-party solutions should be simple and complementary. The ideal log aggregation solution will need to support a wide variety of SIEM platforms, security tools like firewalls and IDPS systems, and embedded devices on both the input and output sides. They should work together to create a better log management service.

- Configuration

Enterprise-level software is often complicated to configure. Log aggregation platforms should be straightforward and intuitive, based on concepts, languages, and configuration styles that are familiar to IT professionals.

- Documentation and user support

Incorporating log aggregation into an existing log management process requires careful planning and testing prior to deployment. As such, a solution should provide well-written, easy-to-understand documentation that is frequently updated, and it should be backed by a community of technical professionals ready to help.

Aggregating logs with NXLog

NXLog is a leader in the log aggregation space. We offer both an open source NXLog Community Edition and a full-featured NXLog Enterprise Edition of our log aggregation platform, empowering organizations to utilize their log data to the fullest.

NXLog has a modular, multi-threaded architecture for high performance, low latency, and throughput of up to 100,000 events per second. We support all major operating systems with our highly scalable logging agent that integrates with best-in-class SIEM and third-party analytics platforms. With NXLog’s emphasis on modular design and straightforward Apache-style syntax for configuration files, even the most complex configurations are easy to create and understand. In short, NXLog is an easy-to-use logging agent that requires only minimal system resources, yet delivers extraordinary results.

At NXLog, we put the same effort into our documentation that we put into our software products so that you can learn how to use our products to their fullest and find the answers that you need.